Experimentation platform with AB department infrastructure

Everything you need for a fully operational AB department: request intake, planning, professional statistics, archive, and performance analytics

No need to build complex infrastructure. Just three components — and you have a complete startup AB department with processes and analysis.

Any traffic splitting system convenient for you

• Many ready solutions

• Paid and free options

• Or your own development

Complete department infrastructure

• Customer request processing

• Backlog planning

• All the math under the hood

• Results storage

• Team sharing

• Team performance analytics

Analyst or manager for infrastructure management

• Processes requests

• Launches experiments

• Shares results

All necessary infrastructure in days, not months

The team submits experiment requests in one place. No more Notion, Jira, or Excel spreadsheets

Data flows from your system via API automatically. Forget manual exports

Each test with all metrics, segmentation, conclusions, and recommendations in a unified format

Experiment calendar, duration calculation, overlap control. Full planning infrastructure

All employees can view experiment results. Transparency and team learning

Knowledge base for retrospectives and onboarding new team members. Department memory

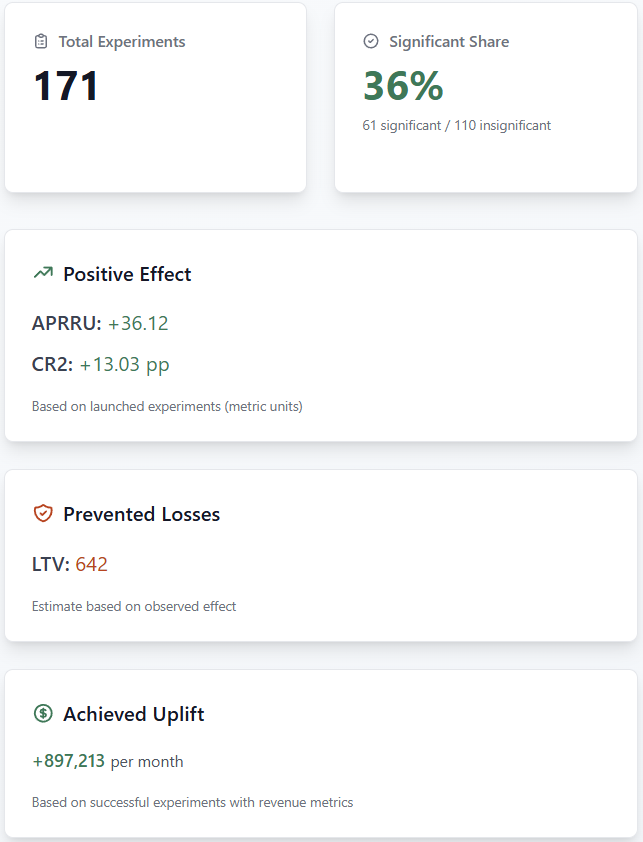

Win rate, launch speed, team efficiency. Metrics for department management and strategy improvement tips

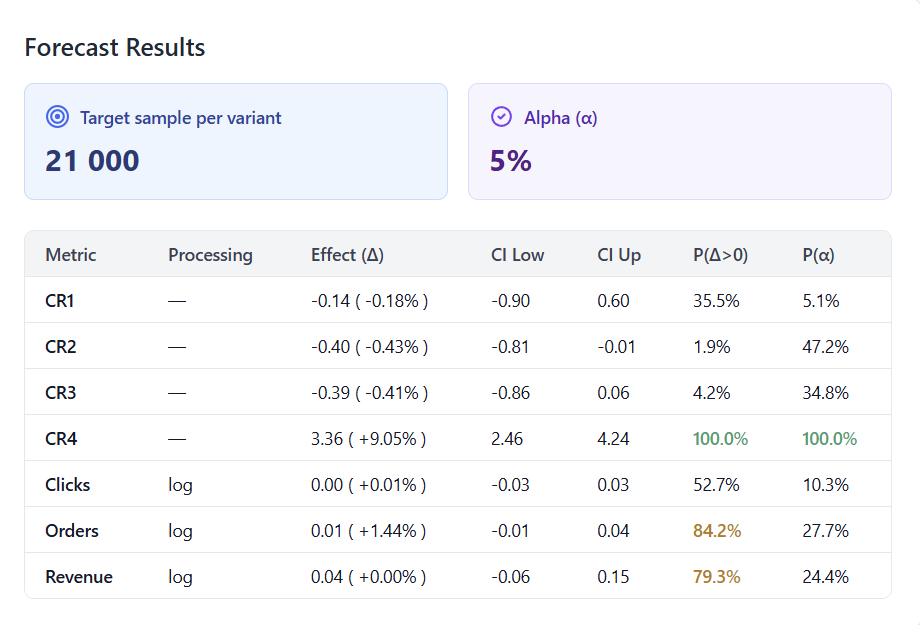

All modern analysis methods available with one click, no manual calculations

Automatic interpretation of results and generation of conclusions for the team

Simple and clear process from idea to results

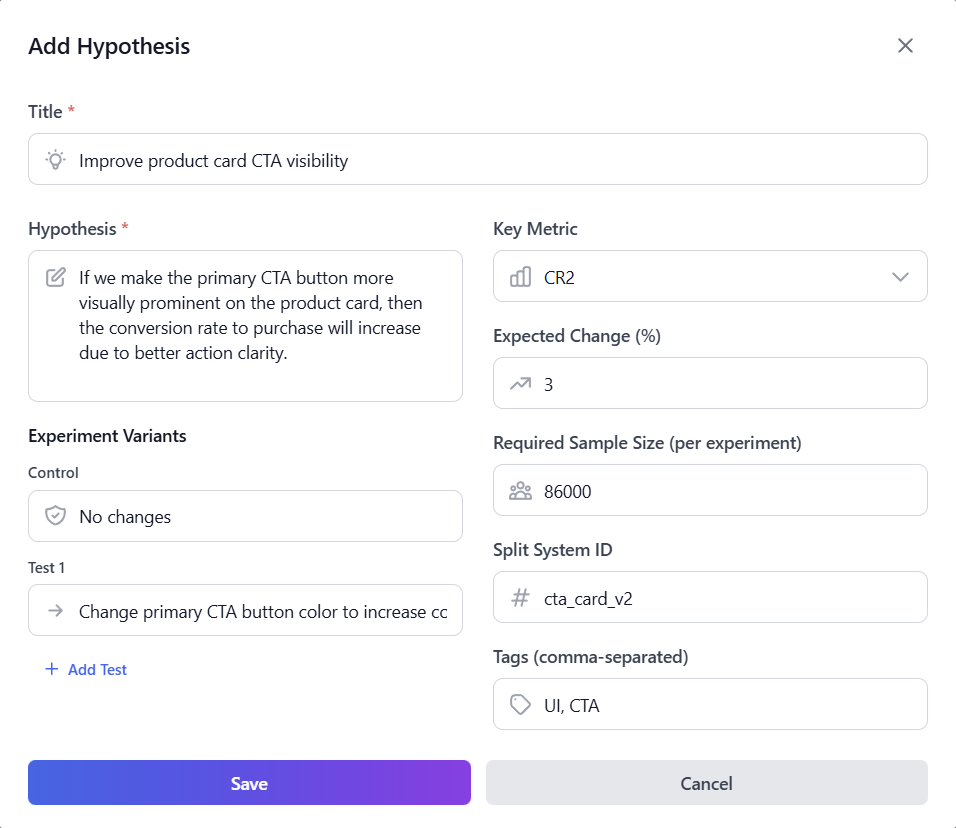

Any employee can create a card in the "Hypotheses" section and describe what they want to test

Analyst calculates required experiment duration and plans launch dates in the calendar

Analyst launches the experiment in your split system and starts collecting data

Experiment data automatically flows into AB-Labz for health monitoring and results analysis

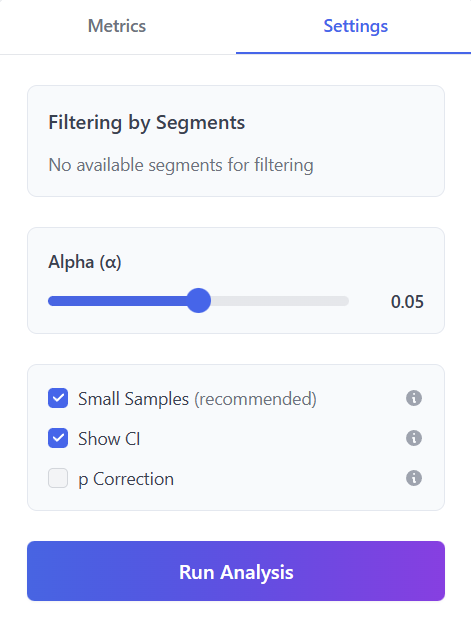

Analyst launches statistics calculation with one button — the system automatically selects methods and calculates everything needed

AI assistant analyzes results and automatically writes clear conclusions, recommendations, and ideas for future experiments

Analyst shares a link to results with the team — everyone can review conclusions and experiment data

From idea to results — all in one system

Analyst spends minutes instead of hours at each stage, and the team gets full transparency of the process
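The duration calculation in the planning step can be sketched as follows. This is only an illustration under stated assumptions, not AB-Labz's actual method: it uses the standard normal-approximation sample size formula for a two-sided two-proportion test, and the function names, baseline rate, lift, and traffic figures are all hypothetical.

```python
import math
from statistics import NormalDist


def required_sample_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users per group for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the target power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def required_days(sample_per_group, daily_users, groups=2):
    """Days needed when daily traffic is split evenly across groups."""
    per_group_per_day = daily_users / groups
    return math.ceil(sample_per_group / per_group_per_day)


# Hypothetical inputs: 10% baseline conversion, +10% relative lift, 4000 users/day.
n = required_sample_per_group(baseline_rate=0.10, relative_lift=0.10)
days = required_days(n, daily_users=4000)
print(f"{n} users per group, about {days} days")
```

In practice the result is usually rounded up to whole weeks so that every weekday is represented equally.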

AB-Labz optimizes experimentation routine. Let analysts focus on research

AB team speed boost

analyst to manage entire department

format style for all experiments

Manual scripts

No need to keep hypotheses in Notion, calculations in Python scripts, and results in Confluence. AB-Labz is a unified space where an idea goes through the complete journey from conception to statistical validation.

Modern professional analysis methods with one click, no manual calculations

Automatic validation of 10+ distribution characteristics to select the best data preparation method

Right statistical test for each metric based on its type and distribution characteristics

Correct conclusions even on small samples

Early stopping without losing correctness

Don't wait months for sufficient data. AB-Labz applies advanced statistical methods designed to handle limited data volumes correctly.

Get reliable confidence intervals even on samples of a few hundred observations

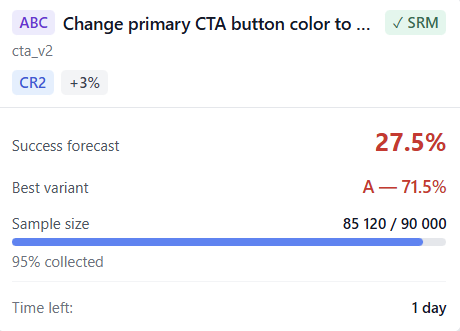

Stop experiments early using a forecast of the probability of reaching significance

All methods account for small-sample specifics and do not inflate the false positive rate
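The FAQ on this page names Monte Carlo resampling as one of the small-sample tools. A percentile bootstrap is one common form of it, and the sketch below assumes that form. It is not AB-Labz's implementation: the function name, the resample count, and the synthetic revenue-like data are illustrative assumptions.

```python
import random


def bootstrap_diff_ci(control, treatment, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile bootstrap CI for the difference in means (treatment - control)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping its original size.
        c = rng.choices(control, k=len(control))
        t = rng.choices(treatment, k=len(treatment))
        diffs.append(sum(t) / len(t) - sum(c) / len(c))
    diffs.sort()
    tail = (1 - confidence) / 2
    low = diffs[round(tail * n_resamples)]
    high = diffs[round((1 - tail) * n_resamples) - 1]
    return low, high


# Synthetic, skewed data with 300 observations per group (illustrative only).
data_rng = random.Random(42)
control = [data_rng.expovariate(1 / 10) for _ in range(300)]
treatment = [data_rng.expovariate(1 / 12) for _ in range(300)]

low, high = bootstrap_diff_ci(control, treatment)
observed = sum(treatment) / len(treatment) - sum(control) / len(control)
print(f"observed diff {observed:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

The bootstrap makes no normality assumption, which is why it is often used for skewed metrics such as revenue on samples of this size.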

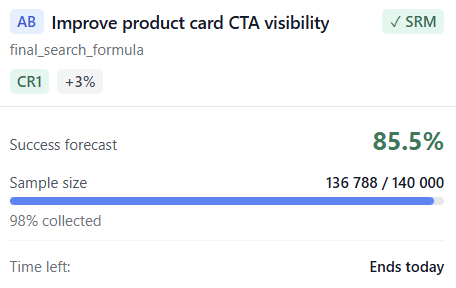

In classical A/B testing, checking results before the planned sample size is reached inflates the false positive rate. AB-Labz applies sequential testing, which lets you track the probability of experiment success while the test is still running.
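To illustrate the problem that sequential testing addresses, the simulation below runs A/A tests (no real difference between groups) and compares two habits: checking a fixed-threshold z-test at ten interim looks versus checking once at the end. It is a generic demonstration, not AB-Labz's sequential procedure, and the simulation sizes and seed are arbitrary assumptions.

```python
import math
import random

Z_CRIT = 1.96  # two-sided 5% threshold for a z-test


def simulate_aa(n_per_group, looks, rng):
    """Run one A/A test; return (significant at any look, significant at the end)."""
    sum_a = sum_b = 0.0
    checkpoints = {n_per_group * (i + 1) // looks for i in range(looks)}
    any_sig = False
    z = 0.0
    for n in range(1, n_per_group + 1):
        sum_a += rng.gauss(0, 1)
        sum_b += rng.gauss(0, 1)
        if n in checkpoints:
            # Unit variance is known here, so the difference of means has SE sqrt(2/n).
            z = (sum_a / n - sum_b / n) / math.sqrt(2 / n)
            if abs(z) > Z_CRIT:
                any_sig = True
    # The last checkpoint equals n_per_group, so z holds the final statistic.
    return any_sig, abs(z) > Z_CRIT


def false_positive_rates(n_sims=1000, n_per_group=500, looks=10, seed=1):
    rng = random.Random(seed)
    peeking = final = 0
    for _ in range(n_sims):
        any_sig, end_sig = simulate_aa(n_per_group, looks, rng)
        peeking += any_sig
        final += end_sig
    return peeking / n_sims, final / n_sims


peeking_rate, final_rate = false_positive_rates()
print(f"stop at first significant look: {peeking_rate:.3f}")
print(f"single look at the end:         {final_rate:.3f}")
```

The single-look rate stays near the nominal 5%, while stopping at the first significant interim look produces a clearly higher rate. Sequential methods adjust the decision thresholds so that interim looks do not carry this cost.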

Forecast shows where experiment is heading without violating statistical correctness

Automatic validation of correct user distribution between groups

See how much data has been collected and how much remains until the planned sample size
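Checking that users were distributed correctly between groups is commonly done with a sample ratio mismatch (SRM) test. The sketch below assumes a two-group experiment and a chi-square goodness-of-fit test. The function name and the 0.001 alert threshold are assumptions for illustration, not documented AB-Labz behavior.

```python
import math


def srm_check(count_a, count_b, expected_share_a=0.5, threshold=0.001):
    """Chi-square goodness-of-fit test for the split between two groups."""
    total = count_a + count_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (count_a - exp_a) ** 2 / exp_a + (count_b - exp_b) ** 2 / exp_b
    # With 1 degree of freedom, the chi-square tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value, p_value < threshold


# A small imbalance that is plausible by chance, and a larger one that is not.
for a, b in [(5000, 5100), (5000, 5400)]:
    chi2, p, flagged = srm_check(a, b)
    print(f"{a} vs {b}: chi2={chi2:.2f}, p={p:.5f}, SRM suspected: {flagged}")
```

A strict threshold is used because the check runs on every experiment, and a flagged mismatch usually points to a bug in assignment or logging rather than to a treatment effect.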

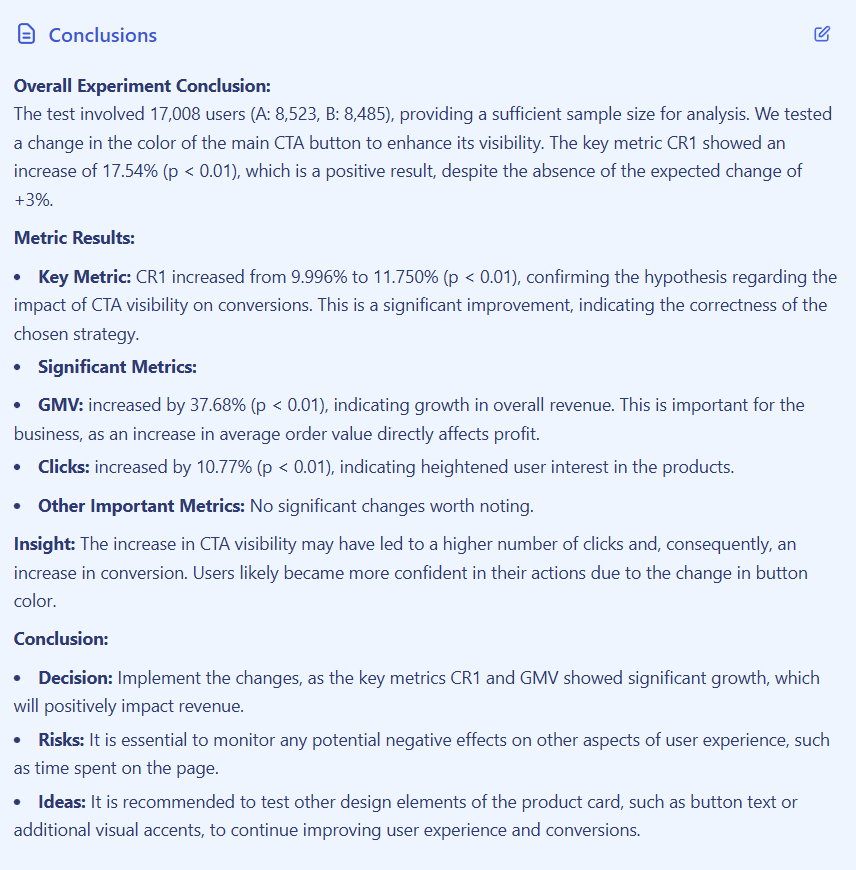

No need to interpret statistics yourself. AI analyzes experiment results and prepares clear conclusions in one click.

Automatic generation of written conclusions from experiment results, with the statistics interpreted for you

AI provides specific recommendations: roll out changes, continue test, or reject hypothesis

Based on current results, AI suggests ideas for next hypotheses and experiments

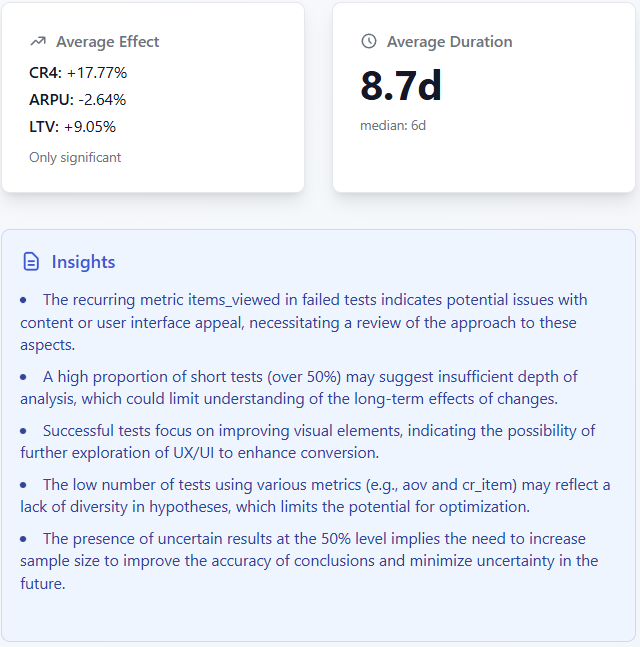

Running tests is not enough — it's important to analyze the entire history. AB-Labz aggregates statistics across all experiments and identifies patterns, helping the team grow and improve hypothesis quality.

Win rate, average test duration, most effective metrics — all company statistics in one place

AI analyzes dozens of experiments and finds systemic issues: low win rate on mobile, too short tests, frequent SRM violations

The team sees what works and what doesn't. Win rate and experimentation efficiency increase over time

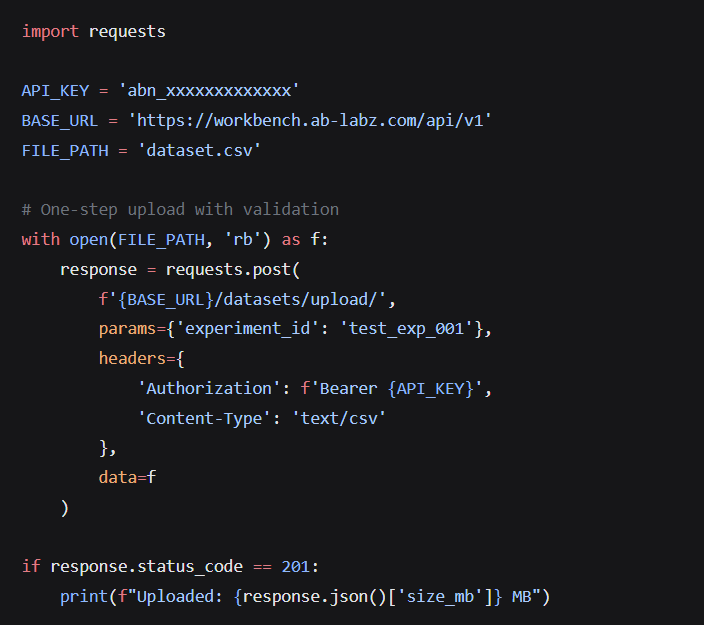

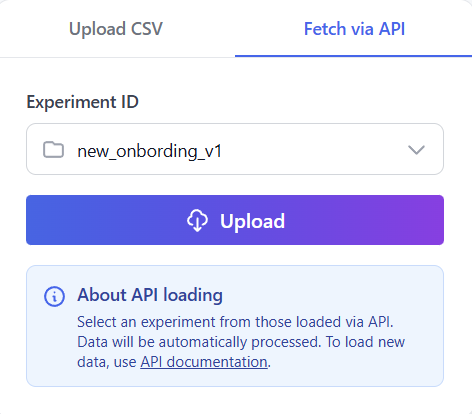

Connect your system via REST API. All experiments will automatically appear in the interface, ready for analysis.

Set up data sending once — no more manual uploads needed

Data loads automatically on a schedule that suits you

REST API with authentication and data validation
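A data upload over the REST API might look like the sketch below. Bearer token authentication is stated in the FAQ on this page. Everything else is an assumption made for illustration: the endpoint URL, the payload field names, and the helper function are hypothetical and are not taken from AB-Labz documentation.

```python
import json
import urllib.request

# Hypothetical endpoint; the real URL comes from the AB-Labz documentation.
API_URL = "https://example.invalid/api/v1/experiments"


def build_upload_request(token, experiment_id, rows):
    """Build (but do not send) an authenticated POST request with experiment data."""
    # Hypothetical payload shape: one aggregated row per user from a data mart.
    payload = {"experiment_id": experiment_id, "rows": rows}
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


rows = [
    {"user_id": "u1", "group": "control", "converted": 0, "revenue": 0.0},
    {"user_id": "u2", "group": "treatment", "converted": 1, "revenue": 19.9},
]
request = build_upload_request("YOUR_API_TOKEN", "exp-checkout-button", rows)
print(request.get_method(), request.full_url)
# To send it from a scheduled job: urllib.request.urlopen(request, timeout=30)
```

Running a script like this from a scheduler (cron or an orchestration tool) is one way to get the "set up once" behavior described above.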

AB-Labz is in closed beta testing. We're looking for teams to help us make the product better.

Full functionality

First 3 months after beta

Your feedback will be prioritized

The closed beta will run until March 31, 2026

Not necessarily. The system automatically selects methods and identifies data issues, and the AI assistant helps understand the tables. But having an analyst with statistical understanding will help correctly interpret results in complex cases.

AB-Labz doesn't manage splitting — your system does that. We focus on professional statistical analysis and process management. Adaptive methods, smart preprocessing, small sample tools — things standard platforms don't have.

No. The system automatically selects methods and prepares data. But we provide detailed documentation so you can correctly interpret results.

Yes. You manage traffic in your own system and analyze results in AB-Labz. AB-Labz connects directly to a prepared data mart, not to raw logs.

Any: conversions, averages (LTV, revenue), ratios (CTR, average check). The system automatically selects the right test and data preprocessing for each metric type.

We use Monte Carlo resampling and Bayesian forecasting, which work correctly on samples starting from 300 observations per group.

Data is stored encrypted during the experiment and deleted after analysis completion. API uses Bearer tokens for authentication. Each organization is isolated.

Yes. You can analyze experiments with any number of groups. The system automatically determines group count and applies appropriate test classes and data preprocessing.

To participate in beta testing, register on AB-Labz Workbench and send us a message through the feedback form.